DigitalGenius’s Head of AI Innovation from 2018-2023

26 Apr 2023


Have you ever stumbled upon a TV show you had no idea existed and ended up hooked on it after just one episode? That’s exactly what happened to me when I first watched Forged in Fire. The show is about four smiths competing with each other to build the best blade. I used to think that a sword was just a piece of steel with a cutting edge, but I realised that each type of sword exists for a reason: every era and culture had its own way of thinking about sword fights, and swords had to be adapted to culture, traditions, fighting style and technology. A medieval European longsword would not have done much good in the era of the Japanese samurai. Even if the base is steel, the shape of the sword, the handle, the curves and the cutting edge all have to be tuned to specific needs.

As a modern tool, Artificial intelligence follows similar rules. Large language models work the same way, having the same base and then being fine-tuned to fit a particular need.

If GPT is the steel, there are multiple ways to forge it to adapt it to different use cases and businesses. Tuning the base model to fit your business needs and customers is not an easy task. We will see that using OpenAI’s models off the shelf is rarely good enough for your business.

At DigitalGenius, our AI models from eight years ago are very different from the models we develop now. From basic NLP to Large Language Models, we have moved with the quickly evolving AI landscape and had to adapt, not to follow a trend but to solve the problems our customers face.

But before jumping into the GPT fine-tuning, maybe I should explain what the base material is in our case. Where does our steel (Artificial Intelligence) come from?

What is NLP?

Natural Language Processing (NLP) is a name given to all AI algorithms and methods to help process human languages.

Making the computer understand human language and getting knowledge from a text in a split second is a big challenge for NLP engineers. NLP is a broad term that goes from keyword detection to large language models like ChatGPT. A few years ago, companies automating customer service queries were still using keyword detection to detect the intent, but we were quick to realise that these methods led to low accuracy and low coverage which meant unsatisfied customers.
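To make the limits of keyword detection concrete, here is a minimal sketch. The rules, intent names and messages are hypothetical and are not taken from any real system; they only illustrate how a keyword approach can miss a query (low coverage) or pick the wrong intent (low accuracy).

```python
import re

# Hypothetical keyword rules: each intent is triggered by a regular expression.
KEYWORD_RULES = {
    "order_status": re.compile(r"\b(where is my order|track(ing)?|order status)\b", re.IGNORECASE),
    "refund": re.compile(r"\b(refund|money back)\b", re.IGNORECASE),
}


def detect_intent(message: str) -> str:
    """Return the first intent whose pattern matches, or 'unknown'."""
    for intent, pattern in KEYWORD_RULES.items():
        if pattern.search(message):
            return intent
    return "unknown"


messages = [
    # Contains a known keyword phrase, so it is detected.
    "Where is my order?",
    # Same need, different wording: no keyword matches (low coverage).
    "My parcel still hasn't shown up",
    # Mentions 'refund' only to rule it out, yet the rule fires (low accuracy).
    "I don't need a refund, I just want to know when my parcel arrives",
]

for message in messages:
    print(f"{message!r} -> {detect_intent(message)}")
```

Adding more keywords helps with one message at a time, but every new rule can also misfire on another message, which is the trade-off described above.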

Understanding that issue led us to start utilising modern NLP techniques with Deep Neural Networks under the hood to make the machine understand human language and respond to customer queries.

Old NLP techniques

Language is complicated. It’s not a one-time invention but the result of thousands of years of evolution, of mixing with other languages, and of a lot of emotions, expressions, figures of speech, irony… Sometimes two people won’t agree on what a particular sentence means, so trying to make a computer understand human language can be very tricky.

An algorithm needs to understand the relations between words and how their places in a sentence can affect their meanings. That is why the research in that field is so vibrant as we always need more sophisticated techniques for NLP.

As of today, we can split NLP into 5 categories:

Keywords-based: that includes RegEx and rule-based methods

Linguistic: using syntax, morphology and grammar. These methods are usually used alongside keywords and machine learning models.

Classic Machine Learning models: these models can run on any computer but struggle when handling big sequential data and understanding relationships between words.

Deep Learning models and recurrent neural networks (RNNs): they struggle to handle long texts, as they have difficulty remembering long-range relationships between words, and they can become very slow to train.

Large Language Models (LLMs) based on the Transformers Architecture.

We’re dealing with the last one here, so let’s explain what we mean by Transformers.

How Transformers changed the game

ChatGPT is a chatbot developed by OpenAI in 2022 that is built on top of GPT models (GPT-3, then GPT-3.5 and, more recently, GPT-4), large language models based on the Transformer architecture. AI researchers from Google introduced Transformers in 2017, and they have been a huge breakthrough in NLP.

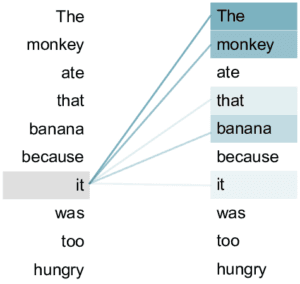

Transformers use the mechanism of self-attention, which gives each word in a sentence an importance relative to every other word, based on the relationship between them. They are trained by hiding words from a text and asking the model to guess them: some models mask words inside a sentence, while GPT models predict the next word. It’s a long process of trial and error.

Then, training the model on millions of texts helps the model learn language specifics. In this example, after training the model we notice the relation between “it” and the words “the monkey” will be given greater importance than “it” and “the banana”.

Old recurrent neural networks were struggling to do that and tended to give importance only to words close to each other.
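The self-attention idea described above can be sketched in a few lines of plain Python. This is a toy illustration under strong assumptions: the two-dimensional word vectors are made up by hand rather than learnt, and the learnt query, key and value projections of a real Transformer are left out, so the same vectors play all three roles.

```python
import math


def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns the mixed output vectors and the attention weights per word.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this word with every word, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up vectors (not learnt): "it" is deliberately placed close to "monkey".
words = ["monkey", "banana", "it"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

_, weights = self_attention(vectors, vectors, vectors)
for word, weight in zip(words, weights[2]):
    print(f"attention from 'it' to {word!r}: {weight:.2f}")
```

Because every word is compared with every other word directly, the distance between two words in the sentence plays no role in the score, unlike in a recurrent network.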

GPT models were trained that way on millions and millions of texts. The large majority of these texts come from the internet. OpenAI, the company behind GPT, used Reddit, Wikipedia and a lot of other data crawled from the internet.

ChatGPT, Generative Question Answering

Just like steel is hardened and then shaped into a sword, these Transformers can then be re-trained or fine-tuned on a large number of conversations allowing the model to learn how to have a conversation and answer with the “most likely” response. That is how ChatGPT was trained on top of GPT.

Then it was trained again using reinforcement learning techniques, which help the model give the type of answers the engineers wanted it to give and decline to answer certain types of questions. The huge variety of data it was trained on and the question-answering task give impressive and accurate results.

There is a common misconception that the model stores the data it has learnt from the internet and simply copies and pastes it to answer questions. The model does not keep a database of those texts; rather, it encodes what it has learnt in a numerical representation (its parameters).

The model is very good at understanding language specificities, generating general responses, and rephrasing. We find it struggles when we want the algorithm to be more specific and respond to precise domain queries. It will not be able to answer precise product recommendations or be up to date with the latest products unless it is given precise context or dynamically retrained on domain data, which it is currently not. ChatGPT is trained to have an answer to every question it is asked so it can start hallucinating and give confusing answers.

Students writing essays have been caught out when ChatGPT has invented academic papers in its answers. Because the answers it gives appear plausible, people have taken what it says at face value as the truth, even when the answers contain these hallucinations.

Impact of LLMs on Businesses

In any business, it’s important to understand what AI skill or solution is appropriate for different use cases. Occasional users can appreciate the generative capabilities of ChatGPT and have a conversation, but professionally, when users talk to a chatbot, they are looking for an answer to their question or a solution to resolve their problem.

Not all Large Language Models are generative, though. It’s important to understand that this is not a ChatGPT revolution but a Large Language Model revolution. At DigitalGenius we have already leveraged these models for multiple skills like Intent Detection (What topic is a user asking about?), Entity Extraction (What is the order number or product?), Sentiment Analysis (What is the tone of the user?) or even Translation.

It’s important to understand what you are looking for, or what weapon you want to forge. Maybe you need a knife, not an axe!

Understanding your data and your use cases is the first step you need to go through before trying to integrate a Language Model. For customer service, you might think integration with ChatGPT with some tweaking is enough but it’s not.

To automate customer messages we need more than a chatbot – we need intent detection and integrations with multiple APIs. If a customer wants to know something about the order they just placed, your tool needs to be able to extract the relevant information – order numbers, shipping tracking links, discount codes – before it answers.

That’s why at DigitalGenius we have created Flow on top of intent detection, to automate the complex processes that will be different for each company.
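As an illustration of "extract the relevant information before answering", here is a minimal sketch. It is not how Flow is implemented; the order-number format, the in-memory stand-in for an order API and the function names are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical order-number format (e.g. "DG-123456"); real formats differ per business.
ORDER_NUMBER = re.compile(r"\b[A-Z]{2}-\d{6}\b")

# Stand-in for a call to an order-management API (assumption for this sketch).
FAKE_ORDER_API = {
    "DG-123456": {
        "status": "shipped",
        "tracking_url": "https://example.com/track/DG-123456",
    },
}


def extract_order_number(message: str) -> Optional[str]:
    """Entity extraction: return the first order number in the message, if any."""
    match = ORDER_NUMBER.search(message)
    return match.group(0) if match else None


def handle_order_status(message: str) -> str:
    """Answer an order-status query only from data that was actually looked up."""
    order_number = extract_order_number(message)
    if order_number is None:
        return "Could you share your order number so I can look it up?"
    order = FAKE_ORDER_API.get(order_number)
    if order is None:
        return f"I could not find order {order_number}. Could you double-check the number?"
    return f"Order {order_number} is {order['status']}. Track it here: {order['tracking_url']}"


print(handle_order_status("Hi, any news on my order DG-123456?"))
print(handle_order_status("Hi, any news on my order?"))
```

The point of the design is that the reply is assembled from retrieved facts, so the tool asks for missing information instead of guessing.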

The ability of LLMs to understand language has changed the game. It’s easy to use OpenAI’s API with a prompt that will orient the answer to fit the business. It’s easy to ask ChatGPT to classify a text into multiple categories, or even to ask it to classify a sentiment. No need for integration, just an API call. Or at least that is the dream. Is it really that easy?
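A prompt-based classifier of the kind described above could look roughly like this. The sketch only builds a chat-style list of role/content messages and validates the reply; the actual API call is deliberately left out, and the label set, wording of the instruction and function names are assumptions made for the example.

```python
SENTIMENT_LABELS = ["positive", "neutral", "negative"]  # hypothetical label set


def build_classification_messages(text, labels):
    """Build chat-style messages asking the model for exactly one label."""
    label_list = ", ".join(labels)
    system = (
        "You are a classifier for customer service messages. "
        f"Reply with exactly one label from this list and nothing else: {label_list}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": text},
    ]


def parse_label(reply, labels):
    """Accept the reply only if it is one of the allowed labels."""
    cleaned = reply.strip().strip(".").lower()
    for label in labels:
        if cleaned == label.lower():
            return label
    # The model may ignore the instruction, so never trust free text as a label.
    return "unknown"


messages = build_classification_messages("My shoes arrived damaged!", SENTIMENT_LABELS)
print(messages[0]["content"])
print(parse_label("Negative.", SENTIMENT_LABELS))
print(parse_label("I think the customer is upset", SENTIMENT_LABELS))
```

Even in this small case, some code around the API call is needed to check that the answer is usable, which hints at why "just an API call" is optimistic.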

The best ways to use ChatGPT

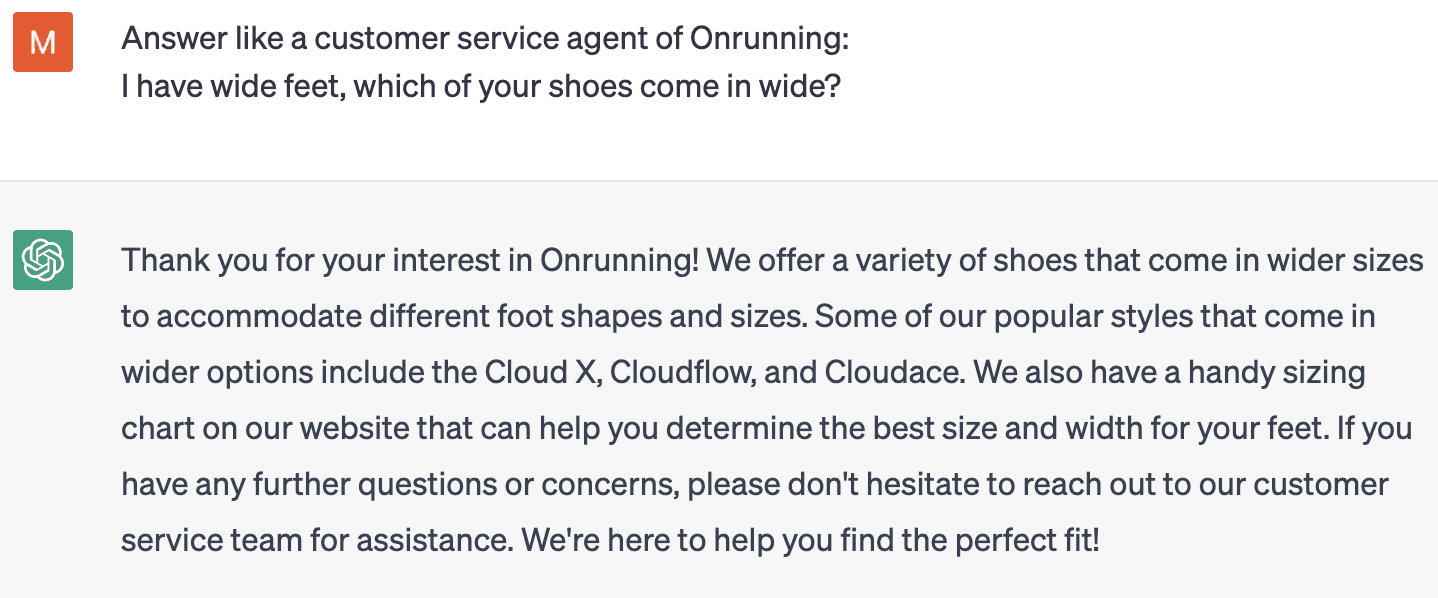

Let’s play with it a bit:

In the example above, you can see how we can play with the instructions to adapt the ChatGPT answer to our needs. It looks great, but we still need to be extra careful with hallucinations. Here the answer is wrong: On does not offer a wide option for the Cloud X model (at least not on the date I wrote this article), and more models exist in wide than the ones listed. ChatGPT shows its limits when we ask it to answer domain-specific questions. The model is also trained on outdated data: what would happen if On released a new wide shoe option?

We can solve that problem in different ways.

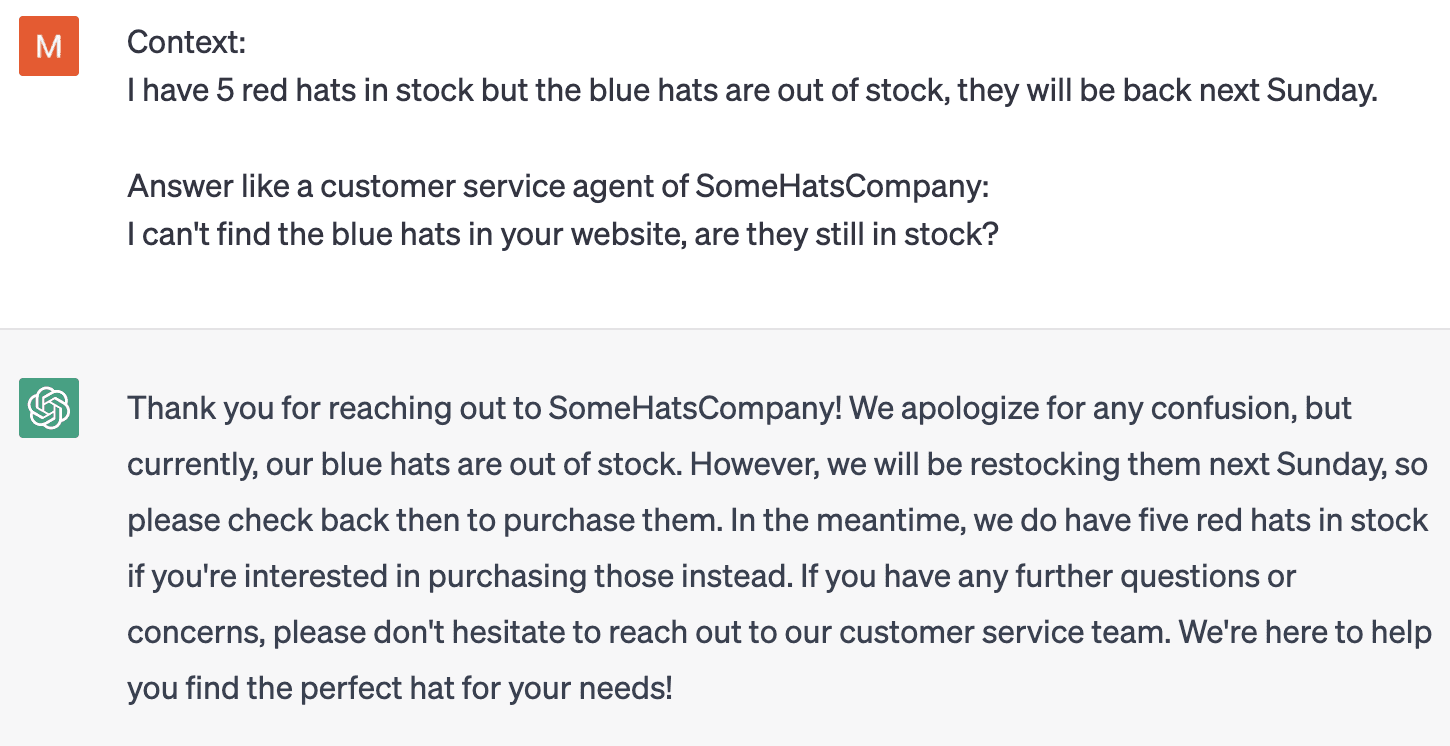

Use context

Here it works well if we explain to ChatGPT how it should answer. This will usually work well, but if you have thousands of products, don’t count on it. ChatGPT has an input size limit, and it tends to be less accurate with long contexts.

Use NLP techniques on top of ChatGPT

A good way to fix that input size limit would be to choose what text you want to put in the context. If a user is asking a specific product question, we would want to automatically add that specific product description to the context. Information retrieval would be the way to go but you will need NLP engineers to build that correctly.
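Choosing which text goes into the context can be sketched as follows. The catalogue is hypothetical, and the word-overlap score is a deliberately simple stand-in for a real information-retrieval component, which would need far more care.

```python
import re

# Hypothetical product catalogue; names and descriptions are invented for the example.
PRODUCTS = {
    "Trail Shoe A": "Trail running shoe with a grippy outsole, available in regular and wide fit.",
    "Road Shoe B": "Lightweight road running shoe, regular fit only.",
    "Rain Jacket C": "Waterproof jacket with a packable hood.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, products, top_k=1):
    """Rank products by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        products.items(),
        key=lambda item: len(question_words & tokenize(item[0] + " " + item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, products, top_k=1):
    """Put only the retrieved descriptions in the context, then the question."""
    context = "\n".join(
        f"- {name}: {description}"
        for name, description in retrieve(question, products, top_k)
    )
    return (
        "Answer using only the product information below. "
        "If the answer is not there, say you do not know.\n"
        f"Product information:\n{context}\n\n"
        f"Question: {question}"
    )


print(build_prompt("Does Trail Shoe A come in a wide fit?", PRODUCTS))
```

Only the best-matching descriptions reach the model, so the prompt stays small however large the catalogue grows.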

Train on top of a Generative Question-Answering model

If you have enough data from past conversations, you can train on top of an existing generative question-answering model. Although the GPT-x models are not open source, a lot of open-source alternatives exist that can work as well: GPT-J, GPT-NeoX, BLOOM…

Pre-training it a second time on domain data could help the model to be more precise and less likely to hallucinate and make up wrong answers. It could also help it answer in the style you want.
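Before any training on past conversations, the data has to be turned into examples the model can learn from. The sketch below shows one possible preparation step; the conversation fields, the prompt/completion layout and the JSON Lines output are assumptions for illustration, and the exact format depends on the model and training tool you choose.

```python
import json

# Hypothetical past conversations; field names are assumptions for this sketch.
conversations = [
    {
        "customer": "Where is my order DG-123456?",
        "agent": "It shipped yesterday and should arrive within 3 days.",
    },
    {"customer": "", "agent": "Thanks for contacting us."},  # no question: skipped
]


def to_training_examples(conversations):
    """Turn each usable customer/agent exchange into a prompt/completion pair."""
    examples = []
    for conversation in conversations:
        question = conversation["customer"].strip()
        answer = conversation["agent"].strip()
        if question and answer:
            examples.append(
                {
                    "prompt": f"Customer: {question}\nAgent:",
                    "completion": " " + answer,
                }
            )
    return examples


def to_jsonl(examples):
    """Serialise the examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(example) for example in examples)


print(to_jsonl(to_training_examples(conversations)))
```

Filtering out empty or unusable exchanges at this stage matters, since the model will imitate whatever style and content the examples contain.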

Conclusion

In conclusion, Transformers have revolutionised the field of natural language processing and have enabled models like ChatGPT to be more advanced and capable than ever before. However, it’s important to note that while ChatGPT is a remarkable tool, it needs to be handled with caution when used for automation. Adapting it correctly to the context and purpose of the business is crucial for achieving optimal results.

The AI team at DigitalGenius is currently working on these use cases by collaborating with product experts. Don’t hesitate to contact us to build an AI that, as the legend Doug Marcaida says, will kill.

To learn a bit more about ChatGPT and the impact it has on Conversation Design, watch our webinar on Large Language Models here.